Pressure measurement plays a vital role in modern industry, scientific research, and everyday applications. In industrial production, pressure — just like temperature, flow, or level — is an essential process variable that must be monitored and controlled. Its measurement accuracy directly affects energy efficiency, production safety, and overall economic performance.

For instance, steam turbine generator systems run on high-temperature, high-pressure steam, and numerous pressure instruments are needed during operation to keep the system stable and efficient. In the chemical industry, accurate pressure control determines reaction outcomes. In ammonia synthesis, for example, maintaining the correct pressure keeps the reaction at its optimal yield: too low a pressure results in poor conversion efficiency, while excessive pressure increases safety risks.

In scientific research and modern technology, pressure influences the structural and phase transformations of materials. Certain metals can only be refined to high purity under ultra-low pressure (high-vacuum) conditions. Artificial diamond production, on the other hand, requires ultra-high pressures in the gigapascal (GPa) range. Even in modern technologies such as thin-film coating, vacuum and pressure control are critical.

Under high pressure, the physical properties of fluids, metals, and other materials — such as compressibility, viscosity, electrical conductivity, and crystal structure — exhibit behaviors that differ from those under standard atmospheric conditions. Therefore, advancements in pressure measurement technology are crucial for understanding and managing these changes.

In defense and aerospace industries, pressure monitoring is equally critical. Applications include wind tunnel testing, aircraft surface pressure mapping, fuel and lubrication system control, hydraulic and pneumatic systems, jet thrust control, and altitude measurement. In all these cases, precise pressure instrumentation is indispensable.

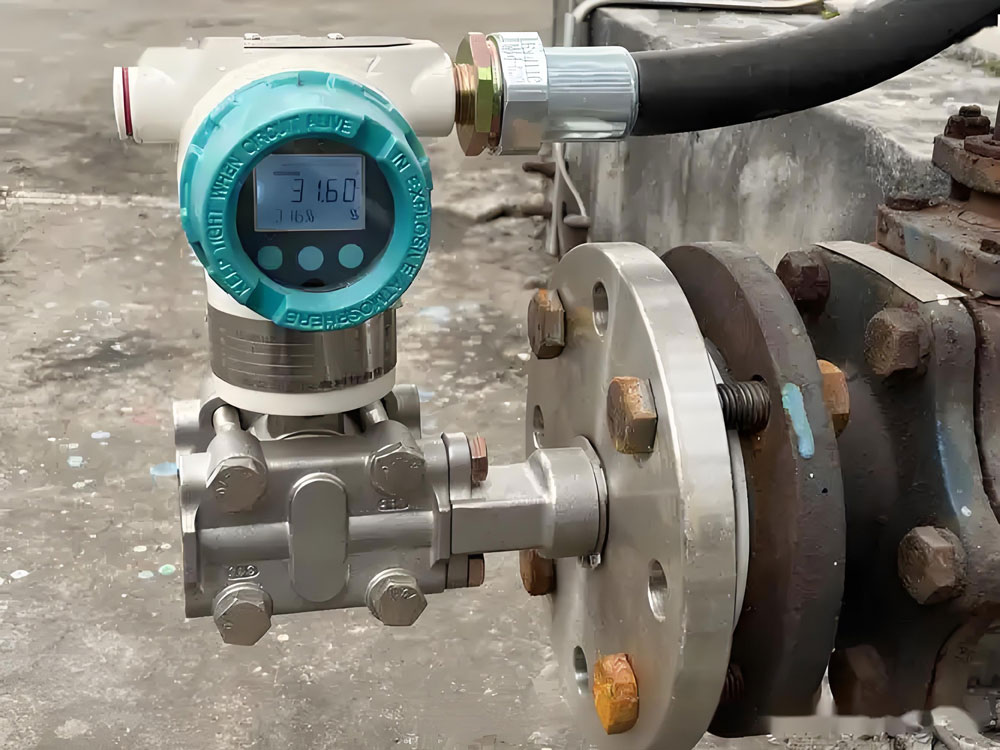

Demands on Pressure Transmitters

With the rapid progress of industrial production and scientific research, the demand for pressure measurement has expanded dramatically. Modern industries require instruments capable of measuring both ultra-high pressures and micro-pressures with extreme accuracy.

Pressure measurement covers a wide variety of applications: gases and liquids, static and dynamic pressure, clean and viscous media, and even toxic or oily fluids. Engineers must also ensure that pressure values are transferred accurately from reference standards to working instruments through calibration, while developing new methods and equipment to meet emerging requirements.

In physics, pressure is the normal (perpendicular) force acting on a surface per unit area. For a force F distributed uniformly over an area A, this relationship is expressed as:

$$p = \frac{F}{A}$$

When the applied force is non-uniformly distributed, pressure can be defined as:

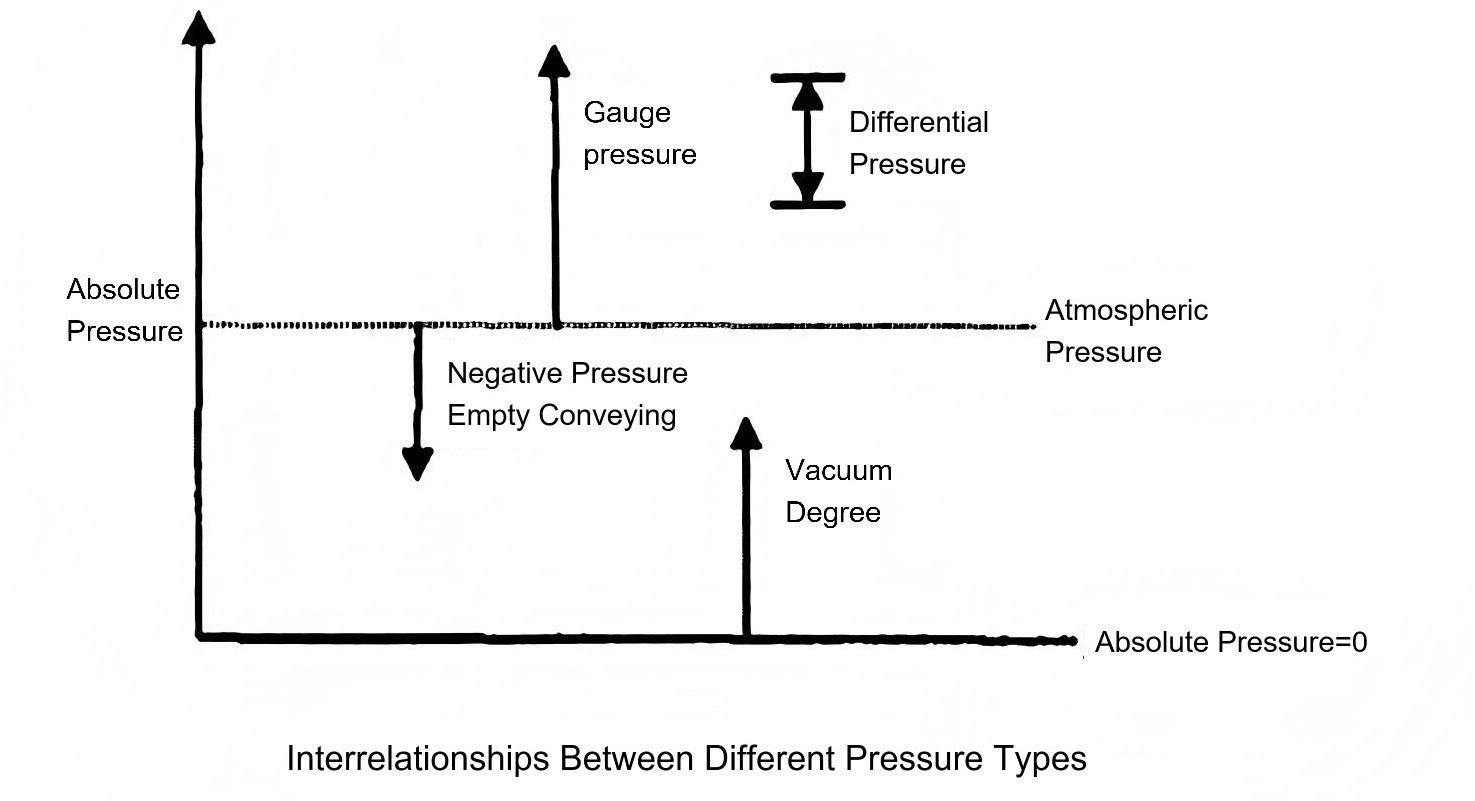
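As a numerical illustration of the definition, the sketch below computes the mean pressure F/A for a uniform load and, for a non-uniform load, approximates the local pressure as the force on each small, equal surface element divided by that element's area (the limit ΔF/ΔA as the element shrinks). The function names and sample numbers are hypothetical, chosen only for illustration.

```python
def mean_pressure(force_n: float, area_m2: float) -> float:
    """Average pressure in Pa for a normal force (N) spread over an area (m²)."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return force_n / area_m2


def local_pressures(forces_n: list[float], element_area_m2: float) -> list[float]:
    """Approximate local pressure dF/dA on equal surface elements.

    Each entry of forces_n is the normal force on one small element; as the
    element area shrinks, force / area approaches the pressure at that point.
    """
    return [mean_pressure(f, element_area_m2) for f in forces_n]


# 1000 N acting uniformly on 0.25 m²
print(mean_pressure(1000.0, 0.25))  # 4000.0

# The same 1000 N spread unevenly over four 0.0625 m² elements
print(local_pressures([100.0, 200.0, 300.0, 400.0], 0.0625))
# [1600.0, 3200.0, 4800.0, 6400.0]
```

The local values average back to the 4000 Pa mean, but the highest one is 60% above it, which is why a non-uniform load needs the local definition rather than a single F/A.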

In engineering practice, pressure is often expressed in several different ways depending on reference conditions and measurement methods.

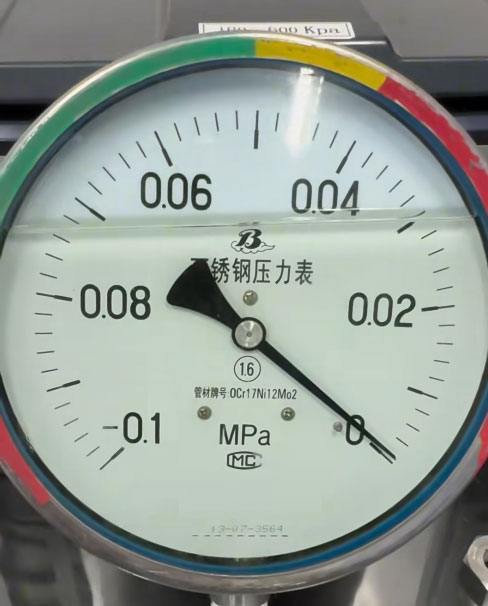

Atmospheric pressure (p₀) is the force exerted by the weight of the air above the Earth’s surface. It varies with altitude, latitude, temperature, and weather conditions.

Absolute pressure (pₐ) is the total pressure exerted by a fluid, gas, or vapor at a specific point, measured relative to a perfect vacuum; it therefore includes atmospheric pressure.

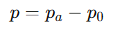

Gauge pressure (p) is the pressure measured relative to atmospheric pressure, i.e.:

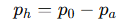

When the absolute pressure is lower than atmospheric pressure, the difference is called vacuum pressure (pₕ), expressed as:

$$p_h = p_0 - p_a$$

The degree of vacuum indicates how much lower the absolute pressure is compared to atmospheric pressure. In most industrial applications, instruments are designed to measure either gauge pressure or vacuum pressure directly.
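The relations between absolute, gauge, and vacuum pressure (gauge pressure p = pₐ − p₀, vacuum pressure pₕ = p₀ − pₐ) can be captured in a few helper functions. This is a minimal sketch: the function names are hypothetical, and the default p₀ of 101 325 Pa is the standard atmosphere, whereas the actual local atmospheric pressure varies with altitude and weather as noted above.

```python
STANDARD_ATMOSPHERE_PA = 101_325.0  # 1 atm; real local p0 varies with altitude and weather


def gauge_pressure(p_abs: float, p_atm: float = STANDARD_ATMOSPHERE_PA) -> float:
    """Gauge pressure p = p_a - p_0 (negative below atmospheric)."""
    return p_abs - p_atm


def absolute_from_gauge(p_gauge: float, p_atm: float = STANDARD_ATMOSPHERE_PA) -> float:
    """Absolute pressure p_a = p + p_0, recovered from a gauge reading."""
    return p_gauge + p_atm


def vacuum_pressure(p_abs: float, p_atm: float = STANDARD_ATMOSPHERE_PA) -> float:
    """Vacuum pressure p_h = p_0 - p_a, meaningful only when p_a < p_0."""
    if p_abs >= p_atm:
        raise ValueError("absolute pressure is not below atmospheric pressure")
    return p_atm - p_abs


print(absolute_from_gauge(250_000.0))  # 351325.0
print(vacuum_pressure(8_000.0))        # 93325.0
print(gauge_pressure(8_000.0))         # -93325.0
```

The last two lines show that, below atmospheric pressure, the vacuum pressure is simply the magnitude of a negative gauge reading.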

The relationships among different types of pressure are illustrated conceptually in Figure 1-1.

Figure 1-1: Relationships among absolute pressure, atmospheric pressure, gauge pressure, and vacuum pressure.

From the definition of pressure, it is clear that pressure is a derived quantity expressed as force per unit area.

In the International System of Units (SI), the unit of pressure is the pascal (Pa), a derived unit defined as:

$$1\ \text{Pa} = 1\ \text{N/m}^2$$

Although the pascal has been widely adopted, several traditional and industry-specific units remain in use across various sectors. The most common ones include:

Technical atmosphere (at): the pressure produced by a force of 1 kilogram-force acting on an area of 1 cm², denoted kgf/cm².

Standard atmosphere (atm): the pressure exerted by a 760 mm column of mercury at 0°C under standard gravity (9.80665 m/s²).

Millimeter of mercury (mmHg): the pressure exerted by a 1 mm column of mercury under the same standard conditions.

Millimeter of water column (mmH₂O): the pressure produced by a 1 mm column of water at 4°C under standard gravity.

Other units in use include the bar (1 bar = 10⁵ Pa), the meter of water column (mH₂O), and the pound-force per square inch (psi or lbf/in²).
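Each of these units can be traced back to pascals from its definition: either a force over an area, or a liquid column of height h, whose pressure is p = ρgh. The sketch below does that arithmetic. It assumes the conventional constants (standard gravity 9.80665 m/s², mercury density 13 595.1 kg/m³ at 0°C, water density 1 000 kg/m³ as conventionally used for mmH₂O, 1 lb = 0.45359237 kg, 1 in = 0.0254 m); the variable and function names are illustrative only.

```python
G_N = 9.80665             # standard gravity, m/s²
RHO_HG_0C = 13_595.1      # conventional density of mercury at 0°C, kg/m³
RHO_WATER = 1_000.0       # conventional water density used for mmH₂O, kg/m³
KGF_N = 1.0 * G_N         # 1 kilogram-force, N
LBF_N = 0.45359237 * G_N  # 1 pound-force, N (1 lb = 0.45359237 kg exactly)
INCH_M = 0.0254           # 1 inch, m (exact)


def column_pressure(density: float, height_m: float) -> float:
    """Hydrostatic pressure p = rho * g * h of a liquid column, in Pa."""
    return density * G_N * height_m


units_in_pa = {
    "kgf/cm²": KGF_N / 1e-4,                     # 1 kgf on 1 cm² (= 1e-4 m²)
    "atm": column_pressure(RHO_HG_0C, 0.760),    # 760 mm of mercury
    "mmHg": column_pressure(RHO_HG_0C, 0.001),   # 1 mm of mercury
    "mmH₂O": column_pressure(RHO_WATER, 0.001),  # 1 mm of water
    "mH₂O": column_pressure(RHO_WATER, 1.0),     # 1 m of water
    "bar": 1.0e5,                                # defined as exactly 100 000 Pa
    "psi": LBF_N / INCH_M**2,                    # 1 lbf on 1 in²
}

for unit, pa in units_in_pa.items():
    print(f"1 {unit:<8} = {pa:>12.3f} Pa")
```

The computed atm lands about 0.01 Pa above 101 325 Pa because the mercury density is a rounded conventional value; the standard atmosphere itself is defined as exactly 101 325 Pa.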

For ease of conversion, Table 1-1 provides conversion coefficients between different pressure units.

Pressure measurement forms the backbone of industrial automation, scientific experimentation, and modern engineering. Understanding the various pressure types, units, and conversion principles ensures accuracy, safety, and efficiency across all technical disciplines. As new technologies demand higher precision and broader measurement ranges, advancements in pressure measurement instruments will continue to drive progress in both industry and research.

Table 1-1: Pressure Unit Conversion Factors (each row gives the value of 1 row unit in the unit of each column)

| Unit Name | Symbol | Pa | bar | mmH₂O | mmHg | atm | kgf/cm² | lbf/in² (psi) | torr |
|---|---|---|---|---|---|---|---|---|---|
| Pascal | Pa | 1 | 1.0×10⁻⁵ | 1.01972×10⁻¹ | 7.50062×10⁻³ | 9.86923×10⁻⁶ | 1.01972×10⁻⁵ | 1.4504×10⁻⁴ | 7.50062×10⁻³ |
| bar | bar | 1.0×10⁵ | 1 | 1.01972×10⁴ | 7.50062×10² | 9.86923×10⁻¹ | 1.01972 | 14.504 | 750.062 |
| Millimeter of water column | mmH₂O | 9.80665 | 9.80665×10⁻⁵ | 1 | 7.3556×10⁻² | 9.678×10⁻⁵ | 1.0×10⁻⁴ | 1.4223×10⁻³ | 7.3556×10⁻² |
| Millimeter of mercury | mmHg | 1.33322×10² | 1.33322×10⁻³ | 13.5951 | 1 | 1.316×10⁻³ | 1.3595×10⁻³ | 1.9337×10⁻² | 1 |
| Standard atmosphere | atm | 1.01325×10⁵ | 1.01325 | 1.0332×10⁴ | 7.6×10² | 1 | 1.0332 | 14.696 | 760 |
| Technical atmosphere | kgf/cm² | 9.80665×10⁴ | 9.80665×10⁻¹ | 1.0×10⁴ | 7.3556×10² | 9.678×10⁻¹ | 1 | 14.223 | 735.6 |
| Pound-force per square inch | lbf/in² | 6.89476×10³ | 6.89476×10⁻² | 7.0307×10² | 51.715 | 6.8046×10⁻² | 7.0307×10⁻² | 1 | 51.715 |
| torr | torr | 133.322 | 1.33322×10⁻³ | 13.5951 | 1 | 1.316×10⁻³ | 1.3595×10⁻³ | 1.9337×10⁻² | 1 |
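Every entry in Table 1-1 is the ratio of two unit sizes, so the whole table can be regenerated, and checked, from a single list of "pascals per unit" factors. The minimal Python sketch below assumes the conventional factors (with the torr taken as 101 325/760 Pa, which differs from the mmHg only in the seventh significant figure); the dictionary keys and function names are illustrative.

```python
# Size of one unit in pascals (conventional values; torr = 101 325/760 Pa).
PA_PER_UNIT = {
    "Pa": 1.0,
    "bar": 1.0e5,
    "mmH2O": 9.80665,
    "mmHg": 133.322387415,
    "atm": 101_325.0,
    "kgf/cm2": 98_066.5,
    "psi": 6_894.757293168,
    "torr": 101_325.0 / 760.0,
}


def convert(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a pressure value between units by going through pascals."""
    for unit in (from_unit, to_unit):
        if unit not in PA_PER_UNIT:
            raise ValueError(f"unknown pressure unit: {unit!r}")
    return value * PA_PER_UNIT[from_unit] / PA_PER_UNIT[to_unit]


def conversion_row(unit: str) -> dict[str, float]:
    """One row of a Table 1-1 style table: 1 `unit` expressed in every unit."""
    return {other: convert(1.0, unit, other) for other in PA_PER_UNIT}


for name in PA_PER_UNIT:
    row = conversion_row(name)
    print(f"{name:<8}", "  ".join(f"{v:.5g}" for v in row.values()))
```

Routing every conversion through the pascal means only one factor per unit has to be stored, rather than an n × n grid, and any row of the table can be recomputed on demand, for example `convert(2.5, "bar", "psi")` for a 2.5 bar reading.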
